How to Optimise Your Website and Brand to Be Surfaced in Google AI Overviews & AI Mode

Date : October 15, 2025 By

Contents

- 1 How Has Search Behavior Changed with AI-Powered Results?

- 2 Why Should You Care About AI Search Optimization?

- 3 What Does an Effective AI Optimization Workflow Look Like?

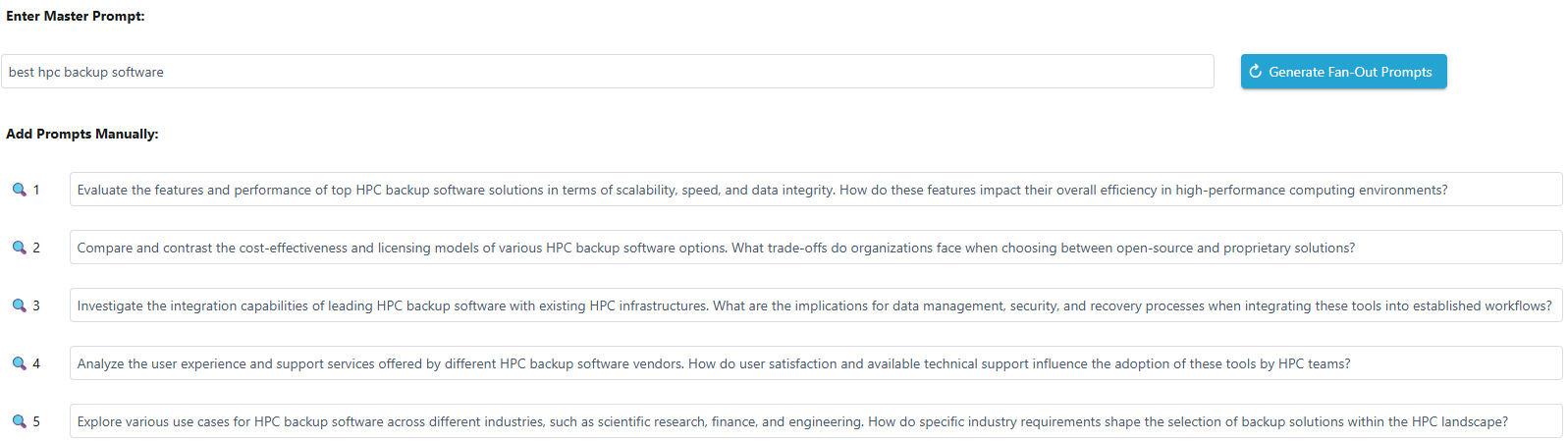

- 4 How to Leverage Fan-Out Data from Qforia?

- 5 Interpreting Your Content Around Fan-Outs with Market Brew AI Mode Visualizer

- 6 How Would You Identify and Fix Semantic Content Gaps?

- 7 Metrics to Track After Optimization

- 8 Key Takeaways

How Has Search Behavior Changed with AI-Powered Results?

Search, and the kind of content it makes visible, has always been shaped by what meets users’ needs. In the recent years of traditional search, most users were content to browse multiple results, moving between blog posts, how-to guides, and sometimes forums to piece together answers to their queries. Content managers therefore focused their strategies on technical and on-page optimization, off-page authority, website UX optimization for the Navboost algorithm, and other traditional SEO ranking factors.

However, as technology has evolved, so has user behavior. The arrival and adoption of Google’s AI Overviews and AI Mode signal a deep but subtle shift: users are starting to prefer faster, synthesized, and context-aware answers over traditional search results. In fact, Gartner predicted in 2024 that traditional search engine volume would drop 25% by 2026 as queries move to AI chatbots and other virtual agents. For an even simpler proof, log in to your Search Console and count how many search terms longer than 5 words (these are already very close to natural-language prompts) you had 3 years ago versus 3 weeks ago. I bet you’ll see many more of them now than ever before.
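The Search Console check above can be sketched in a few lines of Python. This is a minimal illustration, assuming you have exported your queries to CSV; the column name `Top queries` and the sample rows are assumptions for the example, so adjust them to match your own export.

```python
import csv
import io

def long_query_share(csv_text, min_words=6, query_column="Top queries"):
    """Return the share of queries containing at least `min_words` words.

    `query_column` is an assumed header name; change it to match your export.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return 0.0
    long_rows = [r for r in rows if len(r[query_column].split()) >= min_words]
    return len(long_rows) / len(rows)

# Hypothetical export rows, for illustration only.
sample_export = """Top queries,Clicks,Impressions
hpc backup,120,4000
best hpc backup software for large scale simulations,8,310
how to choose the right hpc backup software for my cluster,5,190
lustre backup,40,900
"""

share = long_query_share(sample_export)
print(f"{share:.0%} of queries have more than 5 words")
```

Running the same function on an export from 3 years ago and on a recent one gives you two percentages to compare.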

That said, this is not a call to abandon SEO and go all-in on AI optimization, since the majority of traffic still comes through traditional organic results. What this shift does indicate is the need for SEOs and marketers to adapt. If your goal is to future-proof your content strategy, blend traditional SEO with GEO (generative engine optimization, another term for AI search optimization). This will help your content to be interpreted, scored, and mentioned by AI systems in addition to being ranked in traditional search results.

In this article, I will walk you through a step-by-step approach using tools that I rely on myself, namely iPullRank’s Qforia, Market Brew’s AI Mode Visualizer, and iPullRank’s Relevance Doctor, to optimise content for better surfacing in both Google AI Overviews and AI Mode.

Why Should You Care About AI Search Optimization?

AI Overviews were rolled out in May 2024 and, according to one study, now appear in about 47% of all organic searches, with further expansion projected. Another study found that AI-generated results now impact over 60% of informational queries and 20% of commercial queries across major industries. Additionally, the combination of Google AI Overviews and featured snippets now takes up to 75.7% of the screen on mobile devices. These numbers lead to the so-called “crocodile effect,” where clicks stagnate or decrease while search impressions grow in Search Console accounts.

Those who continue to rely on traditional SEO alone now risk losing organic traffic as their results slip below the fold in SERPs and fade into the background of AI-generated results, especially as Google leans progressively towards vector-based ranking, semantic clustering, and multi-intent resolution.

This guide aims to help marketers transition from SEO alone to a combination of SEO + GEO. It walks through a process that takes marketers from creating purely SEO-optimised content to running a measurable, simultaneous optimization process for both traditional and AI search.

Why Don’t Traditional SEO Tactics Work for AI Search?

Long ago, in the era of traditional SEO, common tactics such as on-page keyword stuffing, backlinks from authoritative sources, or exact-match anchors were required to be visible to a target audience. However, this no longer holds true today.

In my opinion, modern search, and AI-powered search in particular, treats these tactics as superficial signals. They do not account for the way AI systems read content and synthesize responses across different entities, vectors, and semantic relevance.

Google is training its AI models to interpret content the way a regular human would. This means that content is no longer just crawled and ranked for certain keywords: it is also cut into chunks, vectorized, and semantically scored using mathematical similarity methods across multiple vectors.
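To make “mathematical similarity” concrete, here is a minimal sketch of cosine similarity, the measure used by the tools discussed later in this article. The three-dimensional vectors are made-up stand-ins: real embeddings come from a model and have hundreds or thousands of dimensions, and this is not a description of Google’s actual scoring.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for empty vectors
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, for illustration only.
prompt_vec = [0.9, 0.1, 0.3]
chunk_on_topic = [0.8, 0.2, 0.4]
chunk_off_topic = [0.1, 0.9, 0.0]

print(f"on-topic chunk:  {cosine_similarity(prompt_vec, chunk_on_topic):.2f}")
print(f"off-topic chunk: {cosine_similarity(prompt_vec, chunk_off_topic):.2f}")
```

The chunk whose vector points in nearly the same direction as the prompt scores close to 1, while the chunk pointing elsewhere scores much lower.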

Which Tools Actually Work for AI Search Optimization?

Having a good understanding of how AI systems chunk, retrieve, and surface content is an essential step toward optimizing for Google AI Overviews and AI Mode. The successful application of this knowledge, however, requires you to use specific tools that emulate how AI search engines process (cut in chunks and vectorize) content, then assess its mathematical similarity and synthesize responses. The idea behind these tools is to provide you with a “test picture” of how AI Overviews and AI Mode are going to chunk, retrieve and surface your content.

Market Brew’s AI Overviews Visualizer

Market Brew’s AI Overviews Visualizer is based on major semantic algorithms that help search engines process your content. This free tool makes it possible for users to explore the vectorization process that Google uses to synthesize AI Overviews’ responses. It vectorizes content and queries (prompts), allowing users to view their content represented in vector space while exploring how the inherent word-embedding structure responds to search queries.

iPullRank’s Qforia

The Qforia tool is a query fan-out simulator. It simulates how AI engines perform query fan-out, the process in which the initial prompt is divided into multiple subprompts that are used to gather information for AI answer synthesis. Researching fan-out results makes it possible to identify semantic gaps in your content strategy, because fan-out is effectively the process through which AI evaluates topical depth or topical authority. Qforia does this by using Gemini (the same LLM that AI Mode and AI Overviews use) to create a series of synthetic queries that are likely to be used in AI Mode or AI Overviews response synthesis.

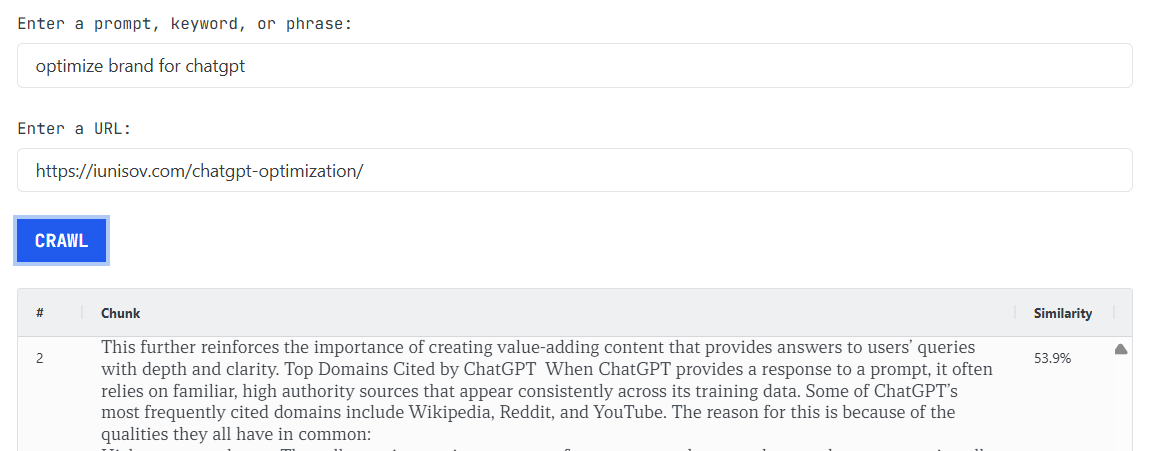

iPullRank’s Relevance Doctor

Relevance Doctor is a tool that is focused primarily on helping content developers understand and boost their semantic relevance scores. This approach is sometimes called “engineer the relevance” nowadays. Relevance Doctor uses a combination of a keyword (prompt) and URL with content to break your information on a page into semantic chunks (based on actual content relevance) or layout chunks (based on H2 structure), and score each one for semantic similarity. The tool helps you visualize which sections align with your target prompt and which need adjustment – all in the vector space.
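The idea of layout chunks can be illustrated with a short sketch that splits a page at its H2 headings. It assumes the page is available as markdown text with `## ` headings, and the `split_by_h2` helper and sample page are hypothetical; it is not how Relevance Doctor itself is implemented.

```python
def split_by_h2(markdown_text, intro_label="Introduction"):
    """Split markdown into (heading, body) chunks at each '## ' heading.

    Text before the first H2 is kept under `intro_label` (an assumed name).
    """
    chunks = []
    heading, body = intro_label, []

    def flush():
        text = "\n".join(body).strip()
        if text:  # skip headings with no body text
            chunks.append((heading, text))

    for line in markdown_text.splitlines():
        if line.startswith("## "):
            flush()
            heading, body = line[3:].strip(), []
        else:
            body.append(line)
    flush()
    return chunks

# Hypothetical page content, for illustration only.
page = """Intro paragraph.

## What is HPC backup?
HPC backup protects cluster data.

## Lustre backups
Lustre needs parallel-aware tools.
"""

for title, text in split_by_h2(page):
    print(f"{title}: {text}")
```

Each resulting chunk can then be scored against the target prompt on its own, which is what makes section-level weaknesses visible.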

What Does an Effective AI Optimization Workflow Look Like?

While the principles of AI content optimization sound straightforward in theory, these strategies often lack consistency in application. As a content manager looking to consistently surface in AI Overviews and AI Mode, you will need to form a replicable, data-driven workflow that efficiently connects fan-out mapping, semantic similarity analysis, and progressive content refinement into a single loop.

Step 1 – Generate Fan-Out Examples with Qforia

As explained above, Qforia replicates the way AI engines break down initial prompts into multiple subprompts to gather information for answer synthesis. By analyzing these fan-out results, it is possible to pinpoint semantic gaps in your content strategy. Instead of chasing a single primary prompt, Qforia helps provide a full view of user intents ranging from broad informational queries to conversational prompts, which AI systems thrive on.

Once the core topic or seed keyword is fed into Qforia, the tool generates a rich set of long-tail, question-based, and multi-step prompts modelled on the way actual users phrase their questions in AI search. These generated prompts are context-aware and expose what people actually want to know. For instance, if the prompt “What is the best HPC backup software” is fed into Qforia, the tool will generate realistic queries such as “Comparison of Veeam, Rubrik, and Commvault for HPC backups” or “HPC backup software supporting Lustre file systems”.

Step 2 – Create Optimised Content

Now that you have a clear understanding of what to cover in your content, the next step is to make sure the content you create is structured in a way that AI search engines would be able to use.

Writing a good article is only part of the process: you also need specific strategies to make your content retrievable for AI answer synthesis. I highly recommend reading this blog by Duane Forrester to understand how to write content for maximum retrievability. My custom GPT is also helpful. It is based on recommendations from forward-thinking experts in the AI optimisation industry, which I summarized in a long prompt.

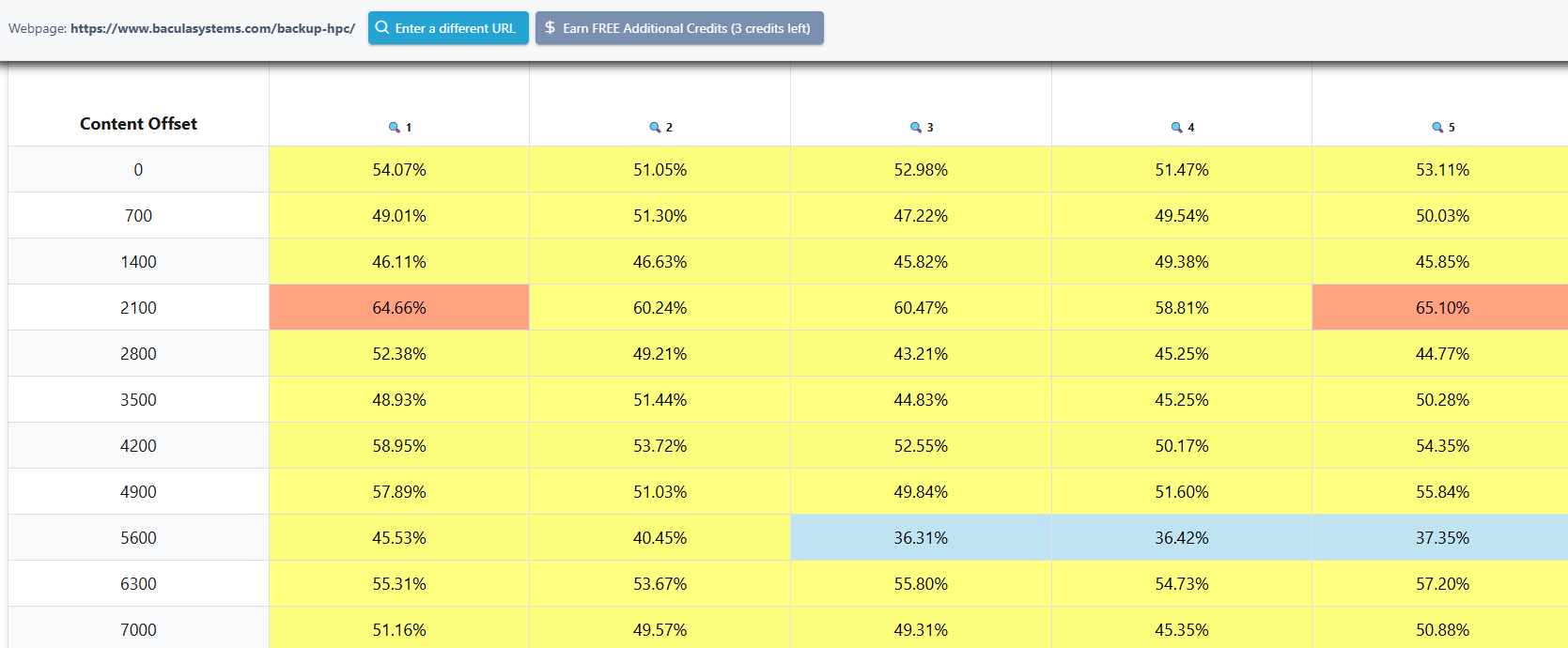

Step 3 – Analyze Content Embedding Similarities with Market Brew

Now that you have built your fan-out tables with Qforia and created content based on this data, the next step is to check how well your content is interpreted by AI search systems using Market Brew’s AI Mode Visualizer. This tool simulates how Google’s AI Mode evaluates your content across multiple prompts.

I suggest starting by adding the URL of your content to Market Brew’s AI Mode Visualizer, adding a starting prompt and fan-out results (Market Brew can also generate the fan-out automatically if needed), and running a calculation. The tool’s built-in LLM will compare your article’s semantic structures against the prompts generated by Qforia, using mathematical similarity to measure how closely each section matches each prompt.

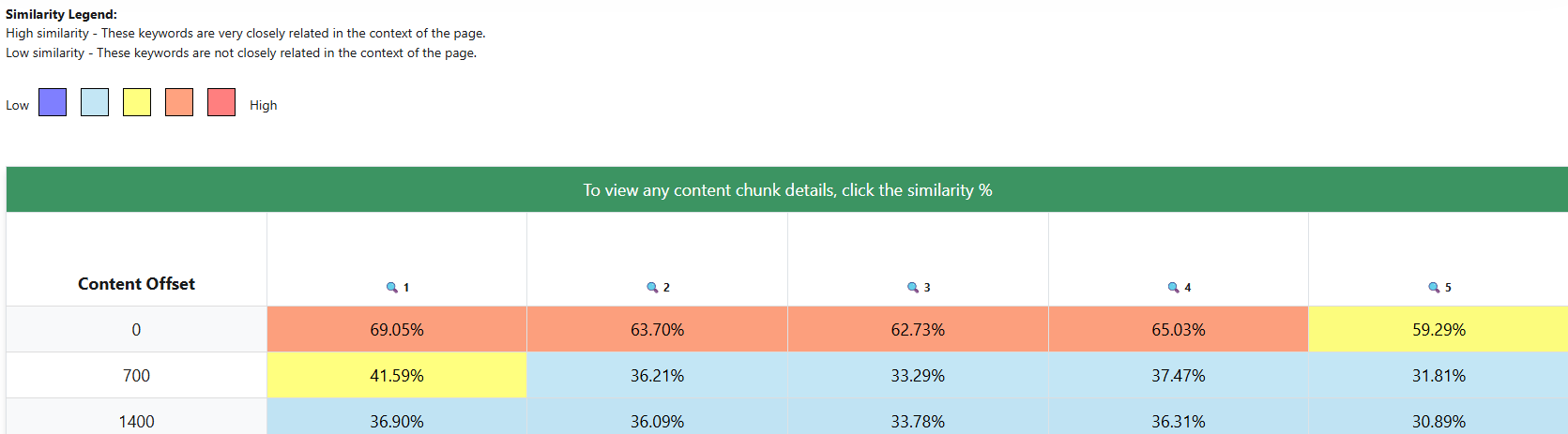

Once the analysis ends, the tool provides a report in the form of a table that looks like a heatmap of sorts.

It shows how well each content chunk on the page (the left column shows 700-word chunks) aligns with each of the 5 fan-out prompts using cosine similarity. A high percentage shows that a chunk answers the bulk of the predicted user prompts, while a low score means you are missing some important angles. This makes it easy to quickly identify underrepresented intents and prioritize the sections that require strengthening.
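A table of this shape can be sketched as follows. To keep the example self-contained, it uses simple word counts as a stand-in for real embeddings, which is an assumption made purely for illustration: Market Brew’s scores come from its own models and will differ. The two sample chunks are hypothetical; the prompts are taken from the Qforia example in this article.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Word-count vector; a crude stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def similarity_matrix(chunks, prompts):
    """Rows are content chunks, columns are fan-out prompts."""
    chunk_vecs = [bag_of_words(c) for c in chunks]
    prompt_vecs = [bag_of_words(p) for p in prompts]
    return [[cosine(c, p) for p in prompt_vecs] for c in chunk_vecs]

chunks = [
    "Lustre file systems need backup software that understands striped storage.",
    "Pricing models for backup software range from per-node to capacity-based.",
]
prompts = [
    "HPC backup software supporting Lustre file systems",
    "HPC backup software pricing models and cost considerations",
]

matrix = similarity_matrix(chunks, prompts)
for chunk, row in zip(chunks, matrix):
    print(chunk[:28] + "...", [f"{score:.0%}" for score in row])
```

Reading across a row shows which prompts a chunk serves; reading down a column shows whether any chunk on the page covers that prompt at all.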

Step 4 – Iterate, Optimise and Monitor

AI optimization is not a one-time task. Once your content has passed through all stages of this workflow, you will need to keep refining it, republishing it, and tracking its results with prompt monitoring tools. Keep in mind that AI search systems are also updated, which changes the way they analyze and surface content and calls for constant vigilance. Track your brand visibility on target prompts and update your content using either Market Brew or Relevance Doctor to maintain that visibility. Running this as a continuous loop helps ensure that your content remains relevant and retrievable in Google AI Mode and AI Overviews.

How to Leverage Fan-Out Data from Qforia?

Content that is favored by the AI models powering AI Overviews and AI Mode is content that meets the full range of user search goals. If you align your content with fan-outs, this should logically increase the probability of your content being cited and used for answer synthesis. Doing fan-outs manually is time-consuming, and the results are usually sparse and inaccurate. As such, a tool like Qforia could not have come at a better time.

Qforia helps transform a single prompt into a structured, multi-layered intent map that accurately emulates different structures of context-related queries that people input into AI search engines.

Instead of relying on static keyword lists or parsing from the “People Also Ask” blocks, Qforia generates dynamic, conversational, long-tail prompts that reflect natural language patterns.

Once generated, these prompts function as a blueprint that guides the writer’s article outline, ensuring that all the relevant niche angles are going to be addressed in the text.

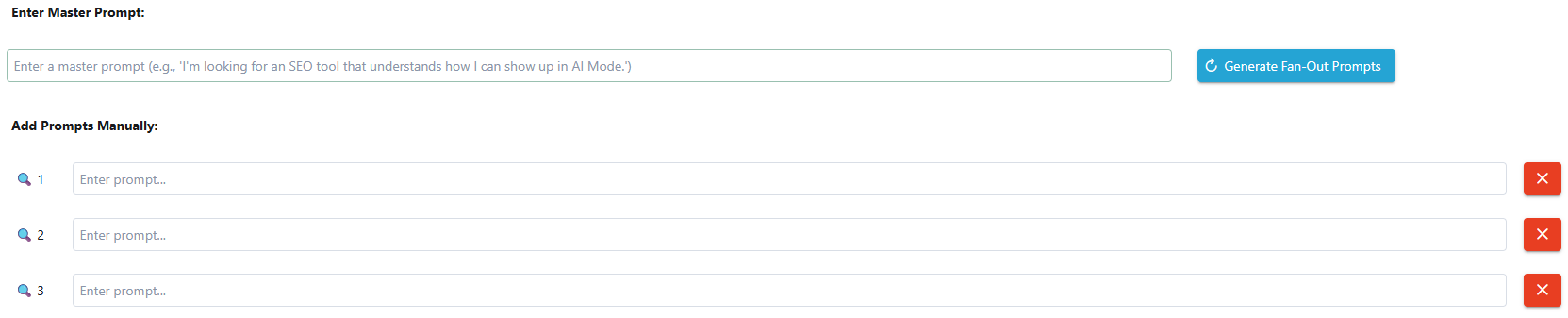

How to Generate Conversational, Long-Tail Prompts

Generating fan-out prompts with Qforia requires a Google Gemini API key, which you can get from either Google AI Studio or Google Cloud Console, so that the tool can make calls to Gemini on your behalf.

Afterward, head to Qforia and enter both the key and your seed prompt in the appropriate fields. Then select either Google AI Overviews (simple) or AI Mode (complex), depending on the AI search engine you are optimizing for. I typically use AI Mode for a more complex simulation.

Once the run starts, the tool automatically expands the seed prompt into a diverse set of conversational prompts, many of which start with “how”, “why”, or “what if” and often include specific scenarios, timeframes, or audiences.

Here’s an example output for the prompt “Best HPC backup software”:

| query | type | user_intent |
| --- | --- | --- |
| Best HPC backup software for large-scale simulations? | Personalized Queries | Find software suitable for high-volume data backups in HPC simulations. |
| Comparison of Veeam, Rubrik, and Commvault for HPC backups | Comparative Queries | Understand the relative strengths and weaknesses of leading HPC backup software vendors. |
| Top open-source HPC backup solutions and their limitations | Related Queries | Explore open-source alternatives and their potential drawbacks. |
| How to choose the right HPC backup software for my cluster? | Reformulation | Guidance on selecting appropriate software based on specific cluster characteristics. |
| HPC backup software supporting Lustre file systems | Entity Expansions | Identify software compatible with a specific HPC file system. |
| What are the key features to consider when selecting HPC backup software? | Implicit Queries | Understand the crucial features and functionalities of HPC backup software. |
| HPC backup software pricing models and cost considerations | Related Queries | Explore various pricing strategies and cost implications of different software. |
| Best practices for backing up HPC data in a hybrid cloud environment | Personalized Queries | Find best practices for backing up HPC data across on-premises and cloud infrastructures. |
| Review of different HPC backup software’s disaster recovery capabilities | Related Queries | Compare the disaster recovery features offered by various HPC backup solutions. |
| Scalability and performance benchmarks of leading HPC backup software | Related Queries | Compare the performance and scalability of various HPC backup software options. |
| How does the backup software integrate with existing HPC workflows? | Reformulation | Understand the integration capabilities of various HPC backup software with pre-existing workflows. |
| Security features of top HPC backup software | Related Queries | Understand the security considerations of various HPC backup solutions. |
| What are the best HPC backup software options for small to medium-sized HPC clusters? | Personalized Queries | Find suitable backup solutions for clusters of varying sizes. |
| Which HPC backup software offers the best data deduplication features? | Entity Expansions | Identify backup software with strong data deduplication capabilities. |
| Compare the ease of use and management of different HPC backup software | Comparative Queries | Assess the user-friendliness and management aspects of various solutions. |
| Finding HPC backup software with strong support and community resources | Related Queries | Identify solutions with good vendor support and community assistance. |
| HPC backup software compatible with NVIDIA GPUs | Entity Expansions | Find software compatible with specific hardware components. |
| Best HPC backup software for genomic data analysis | Personalized Queries | Find solutions tailored to a specific data type used in HPC. |
| Evaluating HPC backup software based on recovery time objective (RTO) and recovery point objective (RPO) | Related Queries | Assess backup software based on key performance indicators. |
| What’s the best cloud-based HPC backup solution? | Reformulation | Focus the search on cloud-based options. |
| HPC backup software with automated backup scheduling and reporting | Entity Expansions | Find software with key automation capabilities. |
| User reviews and ratings of popular HPC backup software | Related Queries | Gather user feedback and assess the performance of different software. |
| Which HPC backup software best integrates with Slurm? | Entity Expansions | Find software compatible with a specific workload manager. |
| Cost-effective HPC backup solutions for research institutions | Personalized Queries | Find affordable options tailored to the needs of research institutions. |
| The impact of different compression algorithms on HPC backup software performance | Related Queries | Understand how compression affects backup performance. |
| Best practices for long-term archival of HPC data using backup software | Related Queries | Explore methods for long-term data archival. |

These generated results mimic the phrasing pattern Google’s AI mode was trained on. Using this data increases the chances of your content being surfaced in AI Overviews, in my opinion and experience.

Why Does Intent Diversity Matter for AI Mode and AI Overviews?

AI search engines do not depend on a single keyword match when it comes to generating a comprehensive answer. This means that if your content only speaks to a single angle of a specific topic – you are running a risk of being ignored in favor of a website that addresses a broader spectrum of user needs.

With the results from Qforia, you would be able to group similar queries together to form clusters of related intent, ensuring that you cover not only the main topic but also different subtopics and niche angles. For instance, if we use the previously mentioned HPC backup example, here is the content that I published to cover it:

- Big Data and HPC

- HPC data centers

- HPSS (HPC) storage

- HPC vs Cloud computing

- ZFS integration

- Lustre backups

- GPFS backups

- True costs of HPC

- Top HPC software providers

- Bare-metal recovery scenarios

These blogs provide information about most of the topics from the fan-out table. Such topical authority increases your chances of being chosen as a primary source in overview snippets, as well as being mentioned in the synthesized response.
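The grouping step described above can be sketched with a few rows from the Qforia example table. This is a minimal illustration that clusters by the `type` column; how you actually cluster intents (by type, by entity, by funnel stage) is a judgment call, and the `group_by_type` helper is hypothetical.

```python
from collections import defaultdict

# A handful of (query, type) rows taken from the example table in this article.
fan_out_rows = [
    ("Comparison of Veeam, Rubrik, and Commvault for HPC backups", "Comparative Queries"),
    ("HPC backup software supporting Lustre file systems", "Entity Expansions"),
    ("Which HPC backup software best integrates with Slurm?", "Entity Expansions"),
    ("HPC backup software pricing models and cost considerations", "Related Queries"),
    ("Best HPC backup software for genomic data analysis", "Personalized Queries"),
]

def group_by_type(rows):
    """Cluster fan-out queries by their query type."""
    clusters = defaultdict(list)
    for query, query_type in rows:
        clusters[query_type].append(query)
    return dict(clusters)

clusters = group_by_type(fan_out_rows)
for query_type, queries in clusters.items():
    print(f"{query_type} ({len(queries)})")
    for query in queries:
        print(f"  - {query}")
```

Each cluster can then be mapped to an existing article or flagged as a topic that still needs one.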

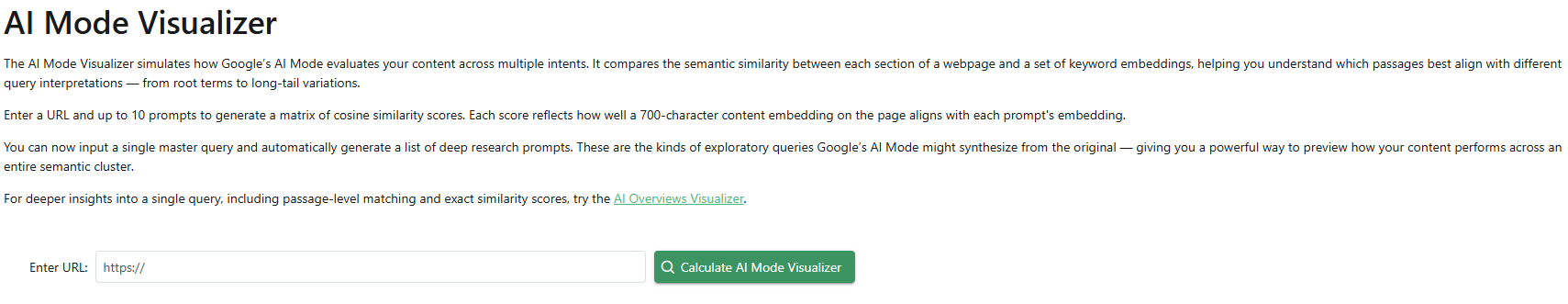

Interpreting Your Content Around Fan-Outs with Market Brew AI Mode Visualizer

Unlike traditional SEO tools that focus on keyword density, word count, backlink count, or other vanity metrics, AI Mode Visualizer evaluates your content through a vector-based semantic lens similar to the one AI search engines use. When you run your content against fan-outs in this tool, the system gives you a cosine similarity score that shows how closely each section aligns with each fan-out prompt. The purpose is not just to ensure that your content is relevant to a certain keyword, but to check that it covers the entire topic spectrum with high semantic value.

Setting Up Your Analysis

Paste an existing URL with your content into the tool and click “Calculate”. When you input the URL, it might take a few minutes for the tool to fully crawl the document. You may either wait on the page or put in your email to be notified as soon as the content is processed.

The next step is to manually upload up to 10 fan-out prompts you created earlier with Qforia. You can also define a master prompt from which the tool will run the fan-out similarly to Qforia.

After that, run the analysis by clicking “Visualize AI Mode Coverage”. Once complete, the tool will map your content against each fan-out prompt and generate cosine similarity scores while highlighting the sections with either weak or strong coverage.

Interpreting the Cosine Similarity Matrix

A cosine similarity matrix is a numerical table (it looks like a heatmap) that shows how semantically similar different entities are to each other in a vector space. Each value in the matrix ranges between 0% and 100%. 100% means that the fan-out prompt and the specific content chunk point in the same direction in the vector space and convey almost the same meaning, while 0% means that the two are unrelated in meaning. Each row represents a content chunk and each column a fan-out prompt, and the cell where they intersect shows the similarity score between them. Here is how I propose interpreting the data:

- High Similarity Score (75% – 100%): Scores close to 100% suggest that the content is well aligned in theme or intent. The target content matches your set of incoming prompts and shares a strong topical relationship with them.

- Moderate Similarity Score (above 40% and below 75%): This range indicates partial similarity. These sections might require fine-tuning, which involves adding more relevant context or clarifying relationships with the fan-out prompts.

- Low Similarity Score (40% or below): Sections with such scores have a weak connection with the subject, and their message is drifting away from the intended topic.
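The interpretation bands above translate directly into a small helper. The thresholds come from the list; treating exactly 75% as high and exactly 40% as low is my reading of the boundaries, and the function name `score_band` is hypothetical.

```python
def score_band(score_percent):
    """Map a 0-100 cosine similarity percentage to an interpretation band."""
    if not 0 <= score_percent <= 100:
        raise ValueError("score must be between 0 and 100")
    if score_percent >= 75:
        return "high"
    if score_percent > 40:
        return "moderate"
    return "low"

# Hypothetical scores for one content chunk against three fan-out prompts.
for score in (82, 60, 35):
    print(f"{score}% -> {score_band(score)}")
```

Applying this to every cell of the matrix gives a quick list of the chunk and prompt pairs that need attention first.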

How to Fix Weak Content Passages Detected by AI Tools?

As a shortcut, you can ask the AI engines themselves what could be improved.

User Prompt:

Does this content

A cosine similarity matrix is a numerical table (looking like a heatmap) that shows how semantically similar different entities are to each other in a vector space. Each value in the matrix ranges between 0% and 100%. 100% means that the fan-out prompt and this specific content chunk are perfectly similar and convey almost the same meaning in the vector space, while 0% means that comparable entities are completely opposite in meaning. Each row and column represent a specific chunk and fan-out prompt, and the intersection point reveals the similarity score between them. Here is how I propose interpreting the data:

have a high cosine similarity ratio for the prompt “cosine similarity”?

ChatGPT Response:

The response is generic, but somewhat useful:

Yes — the content you provided would have a high cosine similarity ratio for the prompt “cosine similarity”.

Here’s why:

- Direct mention: The text explicitly defines and explains cosine similarity in the context of a similarity matrix.

- Semantic alignment: The explanation is centered around how cosine similarity works (values between 0–100%, semantic closeness, meaning of 0% vs 100%).

- Context relevance: It uses key technical terms strongly associated with the concept – “vector space,” “similar,” “matrix,” “fan-out prompt,” “content chunk,” and “intersection point.”

- No topic drift: The content doesn’t wander into unrelated concepts – it stays tightly bound to explaining cosine similarity and interpretation.

In other words, the embedding of this passage in vector space would sit very close to the embedding for the phrase “cosine similarity”, so the similarity score would likely be very high (close to 1.0, or ~90–100% on a 0–100 scale).

👉 If you’d like, I can show you numerically how high the cosine similarity is by encoding both the prompt (“cosine similarity”) and your passage with embeddings and computing the actual similarity score. Would you like me to run that?

Take this as a starting point and either proceed with the calculations or go straight to the rewriting process. In general, here are some pieces of advice:

- Passages dense with filler phrases, generic descriptions, or unclear wording get lower cosine similarity scores.

- Content sections that lack entity explanations, synonyms, or key phrases related to the core topic are less likely to be selected by the AI retrieval system.

- Boost entity density: include relevant entities, terminology, and related concepts without stuffing keywords.

- Tighten the language: remove vague modifiers such as “kind of” or “somewhat” and replace them with precise claims.

- Add detail: expanded explanations, examples, case studies, use cases, and other added context all help your content answer specific queries comprehensively.
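The advice about vague modifiers is easy to check mechanically before you rerun a similarity analysis. Below is a minimal sketch; the word list beyond “kind of” and “somewhat” is my own assumption, so extend or trim it to fit your style guide.

```python
import re

# "kind of" and "somewhat" come from the advice above; the rest are assumed additions.
VAGUE_MODIFIERS = ["kind of", "somewhat", "sort of", "fairly", "rather", "quite"]

def find_vague_modifiers(text, modifiers=VAGUE_MODIFIERS):
    """Count whole-phrase occurrences of each vague modifier in the text."""
    lowered = text.lower()
    hits = {}
    for phrase in modifiers:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        count = len(re.findall(pattern, lowered))
        if count:
            hits[phrase] = count
    return hits

passage = "This tool is kind of fast and somewhat reliable for backups."
print(find_vague_modifiers(passage))
```

A passage with many hits is a good first candidate for rewriting into precise claims.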

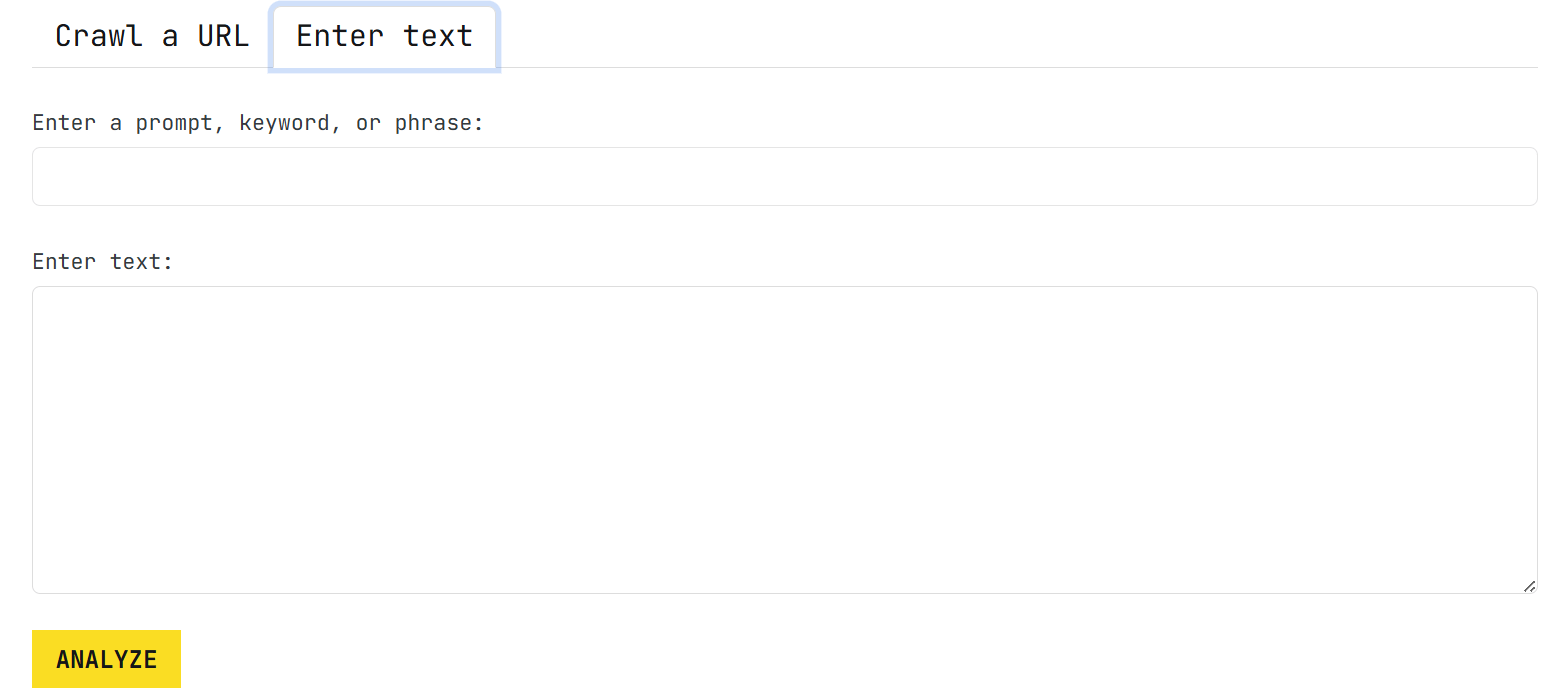

How Would You Identify and Fix Semantic Content Gaps?

The primary focus of Relevance Doctor is to identify the areas where your content falls short in topical coverage. It is used in much the same way as Market Brew’s Visualizer, except that it analyzes content against a single incoming prompt.

How to Check Your Content for Semantic Relevance with the Relevance Doctor

To check any page of your website or a passage of text against a prompt for mathematical similarity:

- Copy the link or text of the page you intend to check;

- Go over to Relevance Doctor and paste the link in “Crawl URL” or text in “Enter text”;

- Enter the target prompt in the “keyword/prompt/phrase” field and click “Crawl” for a URL or “Analyze” for text.

Once this is done, the Relevance Doctor will start analyzing content semantically and use the similarity score (measured in percentages) to help identify how mathematically relevant each passage is to your target prompt.

Relevance Doctor and AI Overviews Visualizer both target optimization for generative search engines, but they approach the topic in different ways. In short, Relevance Doctor emphasizes holistic LLM relevance across platforms, while AI Overviews Visualizer focuses on the mechanics of Google’s AI search experience.

Metrics to Track After Optimization

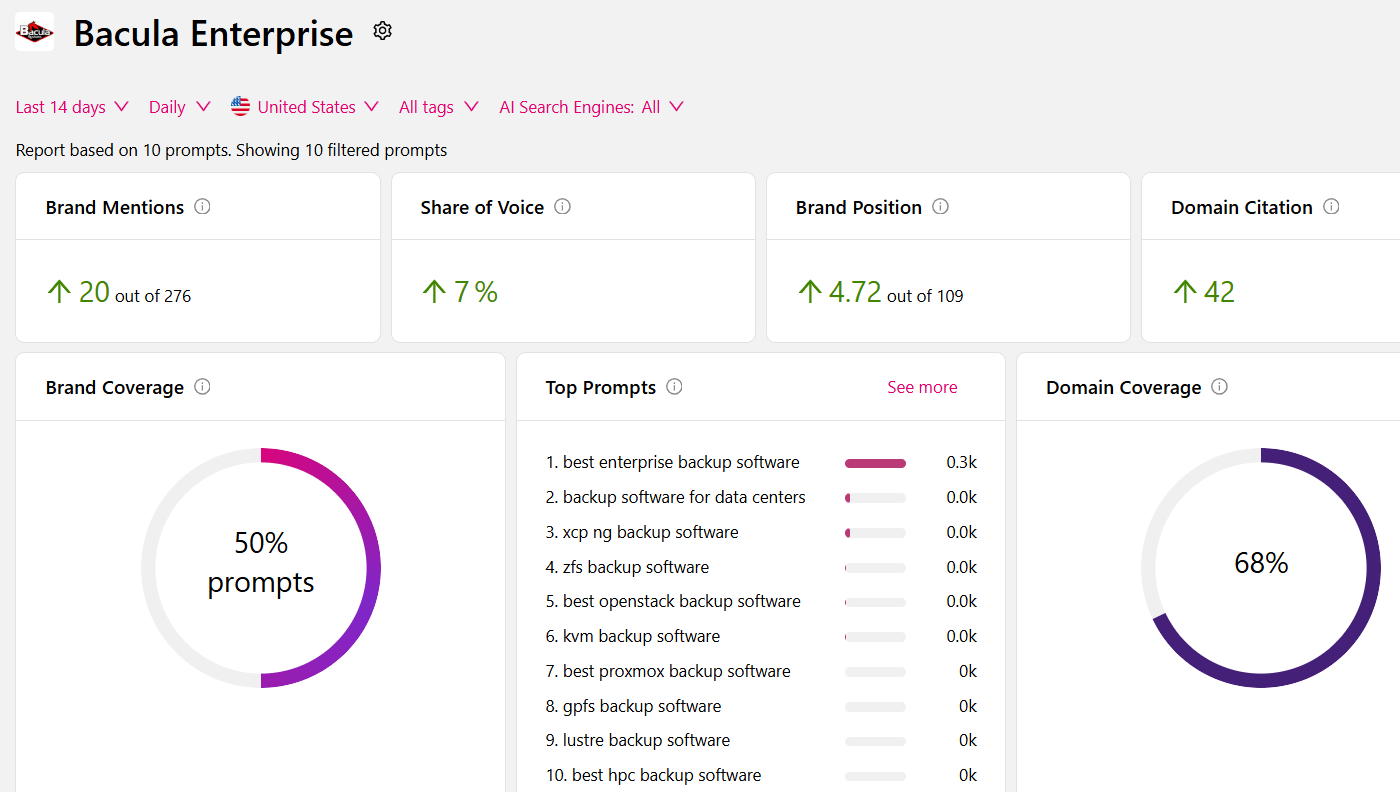

After optimizing your content with Qforia, Market Brew, and Relevance Doctor, the next step is to monitor its impact in AI search. This is where Otterly AI comes in. It provides prompt-level monitoring and citation tracking, allowing you to see how your content is being surfaced in Google AI Overviews and AI Mode. This lets you track the success of your optimization and form a feedback loop. The main metrics are:

- Brand Mentions show how often your brand is cited across AI-generated answers for tracked prompts.

- Share of Voice measures what percentage of all AI brand mentions in a given prompt set belong to your brand.

- Brand Position tracks average placement of your brand when it appears in AI answers (e.g., first vs. later citations).

- Domain Citation counts how often your domain (not just brand) is cited across AI responses.

- Brand Coverage represents the percentage of tracked prompts where your brand appears at least once.

- Domain Coverage shows overall breadth of visibility, similar to brand coverage but at the domain level.
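To show how the brand-level definitions above fit together, here is a minimal sketch that computes them from a hand-made set of tracked prompts. The data structure, the brand names, and the `visibility_metrics` helper are all hypothetical, and the formulas follow the definitions in the list rather than Otterly AI’s internal calculations, which I have not verified.

```python
def visibility_metrics(answers, brand):
    """Compute brand metrics from tracked prompts.

    `answers` maps each tracked prompt to the ordered list of brands
    mentioned in the AI-generated answer (an assumed data shape).
    """
    brand_lists = list(answers.values())
    total_mentions = sum(len(brands) for brands in brand_lists)
    brand_mentions = sum(brands.count(brand) for brands in brand_lists)
    with_brand = [brands for brands in brand_lists if brand in brands]
    positions = [brands.index(brand) + 1 for brands in with_brand]
    return {
        "brand_mentions": brand_mentions,
        "share_of_voice": brand_mentions / total_mentions if total_mentions else 0.0,
        "brand_coverage": len(with_brand) / len(brand_lists) if brand_lists else 0.0,
        "avg_position": sum(positions) / len(positions) if positions else None,
    }

# Hypothetical tracking data, for illustration only.
tracked = {
    "best hpc backup software": ["BrandA", "BrandB", "BrandC"],
    "hpc backup for lustre": ["BrandB", "BrandA"],
    "hpc backup pricing": ["BrandC"],
    "open-source hpc backup": [],
}

metrics = visibility_metrics(tracked, "BrandA")
for name, value in metrics.items():
    print(f"{name}: {value}")
```

The domain-level metrics work the same way, with cited domains in place of brand names.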

Setting up continuous monitoring loops with Otterly AI helps ensure that your content optimization efforts don’t stagnate. Instead, you’ll have the data to iterate, refine, and defend your visibility in AI-driven search environments.

Key Takeaways

- Traditional SEO tactics alone are insufficient as AI-powered search systems now handle nearly 50% of organic searches and prioritize semantic relevance over keyword density.

- While there are many prompt tracking tools on the market, few tools help you close semantic gaps and create and continuously optimise content for better brand visibility.

- Qforia is used to generate fan-out prompts that mirror how AI systems break down queries, ensuring your content covers the full spectrum of user intents.

- Market Brew’s AI Mode Visualizer reveals semantic gaps by scoring your content chunks against fan-out prompts using cosine similarity analysis.

- Relevance Doctor helps identify and fix content sections with weak semantic alignment to target prompts through mathematical similarity scoring.

- Content optimised for AI search requires comprehensive topical coverage rather than single-keyword focus to increase citation probability in AI Overviews.

- Continuous monitoring with tools like Otterly AI creates feedback loops to track brand mentions, share of voice, and domain citations in AI-generated responses.